Wednesday, March 16, 2022 | 12:00pm ET

Speaker: Sean Lie, Cerebras Systems

Please use this link for more information on the Microsystems Technology Laboratories (MTL) Spring Seminar Series.

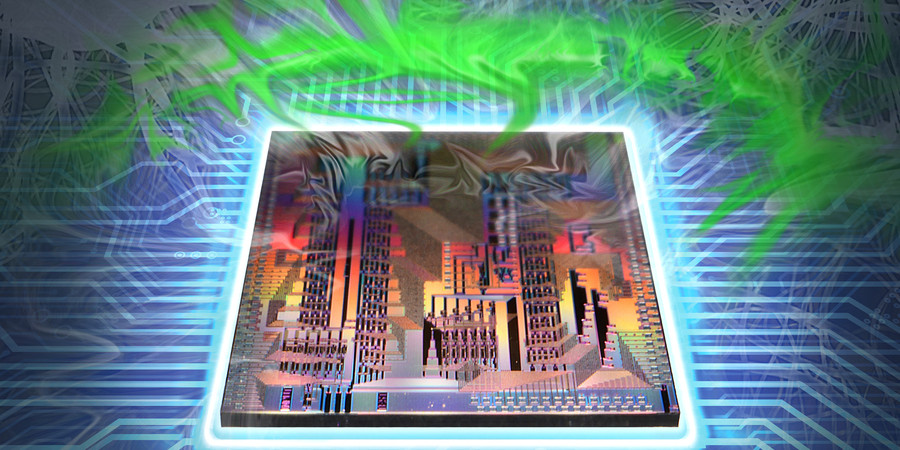

Abstract: The compute and memory demands of state-of-the-art neural networks have increased by several orders of magnitude in just the last couple of years, and there’s no end in sight. Traditional forms of scaling chip performance are necessary but far from sufficient to run the ML models of the future. Beyond the chip itself, end-to-end system and software co-design is the only way to satisfy the performance demand. It requires vertical design across the entire technology stack: from the chip architecture, to the system and cluster design, through the compiler and software, and even unlocking the flexibility to rethink the neural network algorithms themselves.

In this talk, we will explore the fundamental properties of neural networks and why they are not well served by traditional architectures. We will examine how co-design can relax the traditional boundaries between technologies and enable designs specialized for neural networks with new architectural capabilities and performance. This co-design approach enables innovations such as wafer-scale chips, core sparse datapaths, specialized memories and interconnects, novel software mappings and execution models, and highly efficient sparse neural networks. We will explore this rich new design space using the Cerebras architecture as a case study, highlighting design principles and tradeoffs that enable the ML models of the future.

Speaker Bio: Sean Lie is co-founder and Chief Hardware Architect at Cerebras Systems, which builds high-performance ML accelerators. Prior to Cerebras, Sean was a lead architect at SeaMicro and later a Fellow and Chief Data Center Architect at AMD. He holds a BS and MEng in Electrical Engineering and Computer Science from MIT. Sean’s primary interests are in high-performance computer architecture and hardware/software co-design in areas including transactional memory, networking, storage, and ML accelerators.

Explore

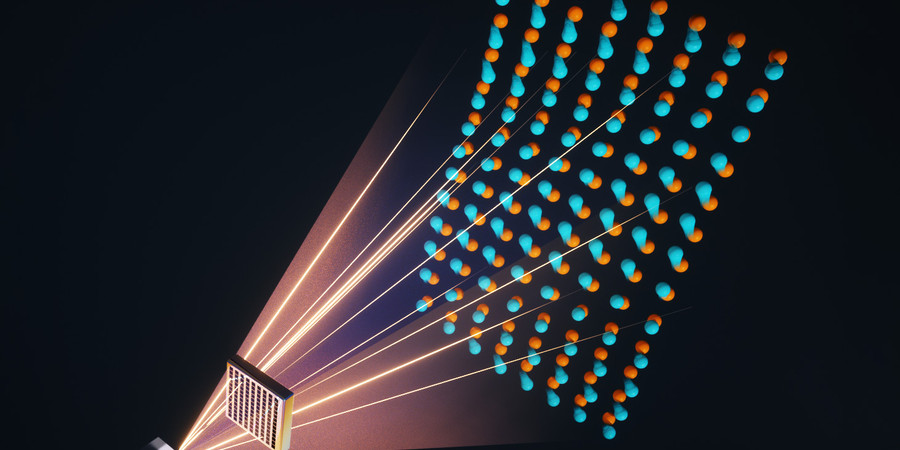

Photonic Processor Could Enable Ultrafast AI Computations with Extreme Energy Efficiency

Adam Zewe | MIT News

This new device uses light to perform the key operations of a deep neural network on a chip, opening the door to high-speed processors that can learn in real-time.

AI Method Radically Speeds Predictions of Materials’ Thermal Properties

Adam Zewe | MIT News

The approach could help engineers design more efficient energy-conversion systems and faster microelectronic devices, reducing waste heat.

A New Way to Let AI Chatbots Converse All Day without Crashing

Adam Zewe | MIT News

Researchers developed a simple yet effective solution for a puzzling problem that can worsen the performance of large language models such as ChatGPT.