Kim Martineau | MIT Quest for Intelligence

As more artificial intelligence applications move to smartphones, deep learning models are getting smaller so that apps run faster and use less battery power. MIT researchers have proposed a technique for shrinking deep learning models that they say is simpler and produces more accurate results than state-of-the-art methods. The technique follows a few simple steps: train the model, prune its weakest connections, retrain the model at its fast, early learning rate, and repeat until the model is as small as you want.
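
The train, prune, retrain, repeat loop can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the researchers' implementation: the model is a tiny linear regressor rather than a deep network, "weakest" is taken to mean smallest absolute weight, and the two-phase learning-rate schedule and all function names (`learning_rate`, `train`, `prune_weakest`, `iterative_prune`) are hypothetical. Each retraining pass restarts the schedule from step 0, which is how the sketch returns to the fast, early learning rate.

```python
import random


def learning_rate(step, total_steps, fast=0.1, slow=0.01):
    # Assumed schedule: a fast rate for the first half of training, a slow one after.
    return fast if step < total_steps // 2 else slow


def train(w, mask, X, y, total_steps=200):
    """Gradient descent on mean squared error. The schedule always starts
    at step 0, so every call begins at the fast, early rate."""
    n = len(y)
    w = [wi * mi for wi, mi in zip(w, mask)]  # pruned weights are held at zero
    for step in range(total_steps):
        lr = learning_rate(step, total_steps)
        residuals = [sum(wj * xj for wj, xj in zip(w, row)) - target
                     for row, target in zip(X, y)]
        for j in range(len(w)):
            if mask[j]:  # only surviving connections are updated
                grad = 2.0 * sum(r * row[j] for r, row in zip(residuals, X)) / n
                w[j] -= lr * grad
    return w


def prune_weakest(w, mask, fraction):
    """Remove the given fraction of surviving weights with the smallest magnitude."""
    alive = [j for j, m in enumerate(mask) if m]
    n_prune = max(1, int(len(alive) * fraction))
    weakest = sorted(alive, key=lambda j: abs(w[j]))[:n_prune]
    return [0 if j in weakest else m for j, m in enumerate(mask)]


def iterative_prune(X, y, target_sparsity=0.75, fraction=0.5, seed=0):
    """Train, prune, retrain, and repeat until target_sparsity (in [0, 1)) is reached."""
    rng = random.Random(seed)
    n_weights = len(X[0])
    w = [rng.gauss(0.0, 0.1) for _ in range(n_weights)]
    mask = [1] * n_weights
    w = train(w, mask, X, y)                      # 1. train
    while mask.count(0) / n_weights < target_sparsity:
        mask = prune_weakest(w, mask, fraction)   # 2. prune the weakest connections
        w = train(w, mask, X, y)                  # 3. retrain from the early, fast rate
    return w, mask
```

Calling `iterative_prune(X, y)` with `X` as a list of feature rows and `y` as a list of targets returns the final weights and a 0/1 mask marking which connections survived. In a real deep learning setting the same loop would wrap a framework's training routine and pruning utilities instead of the hand-written gradient descent used here.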

Complete article from MIT News.

Explore

AI Tool Generates High-Quality Images Faster Than State-of-the-Art Approaches

Adam Zewe | MIT News

Researchers fuse the best of two popular methods to create an image generator that uses less energy and can run locally on a laptop or smartphone.

New Security Protocol Shields Data From Attackers During Cloud-based Computation

Adam Zewe | MIT News

The technique leverages quantum properties of light to guarantee security while preserving the accuracy of a deep-learning model.

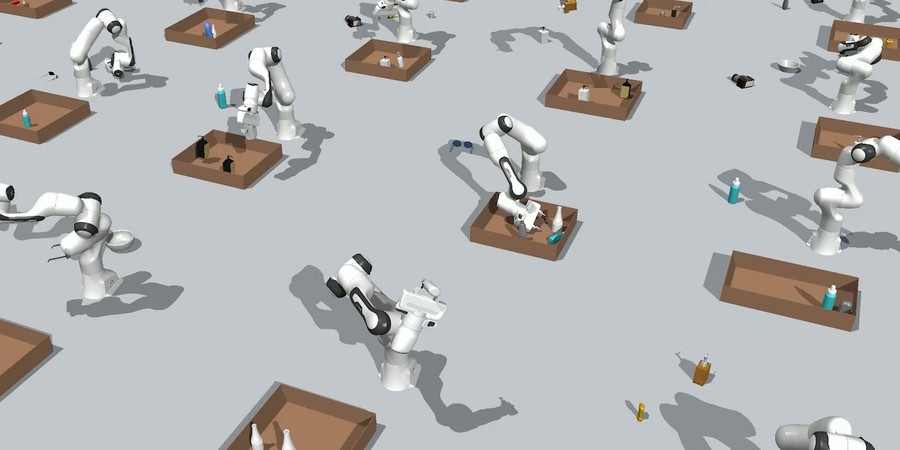

New Technique Helps Robots Pack Objects into a Tight Space

Adam Zewe | MIT News

Researchers coaxed a family of generative AI models to work together to solve multistep robot manipulation problems.