Principal Investigators: Vivienne Sze, Joel Emer

The architectural configuration of an artificial neural network (ANN), along with its performance and energy efficiency, hinges on many design decisions that ultimately stem from the characteristics of the underlying synapse technology. Today, there is no way to rapidly and systematically explore the large design space of ANN accelerator architectures that a given device technology can support. This project pursues an integrated framework of energy-modeling and performance-evaluation tools for estimating the energy efficiency and performance of ANN architectures, with full consideration of the electrical characteristics of the synaptic elements and interface circuits. The framework will be used to guide exploration of the ANN accelerator design space, from the device level up to the architectural level.

Explore

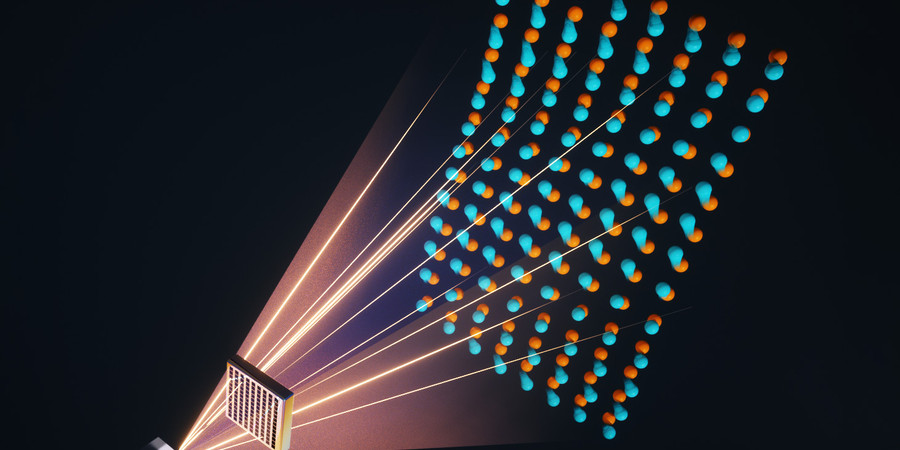

Photonic Processor Could Enable Ultrafast AI Computations with Extreme Energy Efficiency

Adam Zewe | MIT News

This new device uses light to perform the key operations of a deep neural network on a chip, opening the door to high-speed processors that can learn in real-time.

AI Method Radically Speeds Predictions of Materials’ Thermal Properties

Adam Zewe | MIT News

The approach could help engineers design more efficient energy-conversion systems and faster microelectronic devices, reducing waste heat.

A New Way to Let AI Chatbots Converse All Day without Crashing

Adam Zewe | MIT News

Researchers developed a simple yet effective solution for a puzzling problem that can worsen the performance of large language models such as ChatGPT.