February 13, 2024

When a human-AI conversation involves many rounds of continuous dialogue, the large language models that drive chatbots like ChatGPT sometimes start to collapse, causing the bots’ performance to deteriorate rapidly.

A team of researchers from MIT and elsewhere has pinpointed a surprising cause of this problem and developed a simple solution that enables a chatbot to maintain a nonstop conversation without crashing or slowing down.

Their method involves a tweak to the key-value cache (which is like a conversation memory) at the core of many large language models. In some methods, when this cache needs to hold more information than it has capacity for, the first pieces of data are bumped out. This can cause the model to fail.

By ensuring that these first few data points remain in memory, the researchers’ method allows a chatbot to keep chatting no matter how long the conversation goes.
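To make the idea concrete, here is a minimal toy sketch in Python, not the researchers' implementation. It contrasts a plain first-in, first-out cache, which bumps out the earliest entries when full, with a cache that pins the first few entries and slides a window over the rest. The class name `PinnedWindowCache`, the function `plain_fifo`, and the parameters `capacity` and `num_pinned` are all hypothetical, and integers stand in for the key-value data a real model would store.

```python
from collections import deque


class PinnedWindowCache:
    """Toy fixed-capacity cache (hypothetical): the first entries are pinned,
    and the remaining slots hold only the most recent entries."""

    def __init__(self, capacity: int, num_pinned: int):
        if not 0 <= num_pinned < capacity:
            raise ValueError("num_pinned must be in [0, capacity)")
        self.num_pinned = num_pinned
        self.pinned = []  # first few entries, never evicted
        # A deque with maxlen drops its oldest item automatically when full.
        self.recent = deque(maxlen=capacity - num_pinned)

    def append(self, entry):
        if len(self.pinned) < self.num_pinned:
            self.pinned.append(entry)
        else:
            self.recent.append(entry)

    def contents(self):
        return self.pinned + list(self.recent)


def plain_fifo(entries, capacity):
    """Baseline: once the cache is full, the earliest entries are bumped out."""
    cache = deque(maxlen=capacity)
    for entry in entries:
        cache.append(entry)
    return list(cache)


tokens = list(range(10))  # stand-ins for cached data, in arrival order

cache = PinnedWindowCache(capacity=6, num_pinned=2)
for token in tokens:
    cache.append(token)

print(plain_fifo(tokens, capacity=6))  # [4, 5, 6, 7, 8, 9]
print(cache.contents())                # [0, 1, 6, 7, 8, 9]
```

Both caches hold six entries, but only the second still contains entries 0 and 1 after ten insertions. How many initial entries to keep, and how a real model handles position information for the retained data, are details this sketch does not cover.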

Complete article from MIT News.

Explore

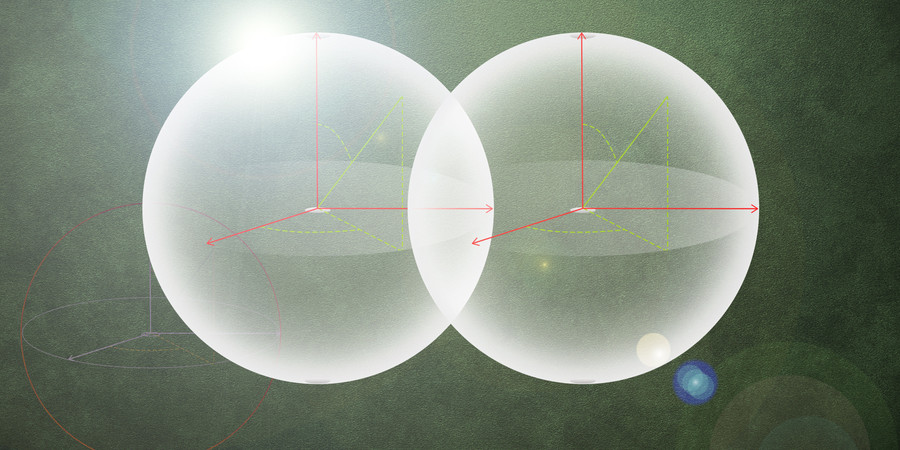

MIT Engineers Advance Toward a Fault-tolerant Quantum Computer

Adam Zewe | MIT News

Researchers achieved a type of coupling between artificial atoms and photons that could enable readout and processing of quantum information in a few nanoseconds.

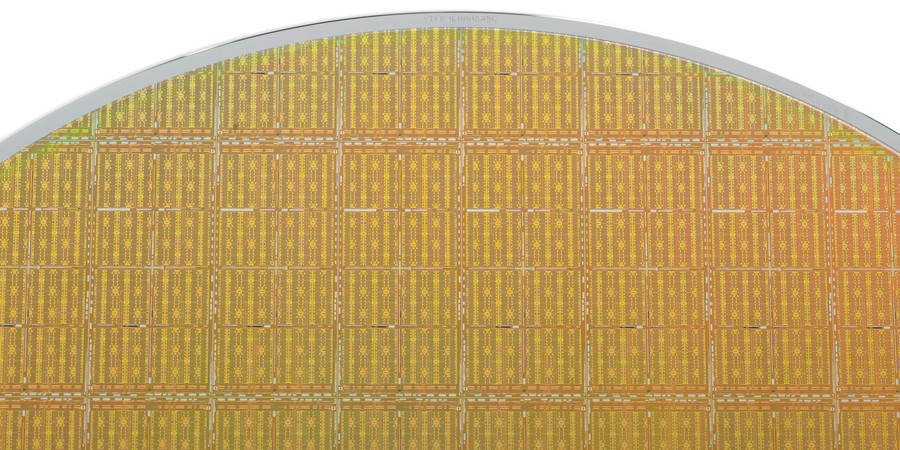

New Chip Tests Cooling Solutions for Stacked Microelectronics

Kylie Foy | MIT Lincoln Laboratory

Preventing 3D integrated circuits from overheating is key to enabling their widespread use.

Analog Compute-in-Memory Accelerators for Deep Learning

Wednesday, April 30, 2025 | 12:00 - 1:00pm ET

Hybrid

Zoom & MIT Campus