March 21, 2025

The ability to generate high-quality images quickly is crucial for producing realistic simulated environments that can be used to train self-driving cars to avoid unpredictable hazards, making them safer on real streets.

But the generative artificial intelligence techniques increasingly used to produce such images have drawbacks. One popular type of model, called a diffusion model, can create stunningly realistic images but is too slow and computationally intensive for many applications. On the other hand, the autoregressive models that power large language models (LLMs) like ChatGPT are much faster, but they produce poorer-quality images that are often riddled with errors.

Researchers from MIT and NVIDIA developed a new approach that brings together the best of both methods. Their hybrid image-generation tool uses an autoregressive model to quickly capture the big picture and then a small diffusion model to refine the details of the image.

Complete article from MIT News.

Explore

New Security Protocol Shields Data From Attackers During Cloud-based Computation

Adam Zewe | MIT News

The technique leverages quantum properties of light to guarantee security while preserving the accuracy of a deep-learning model.

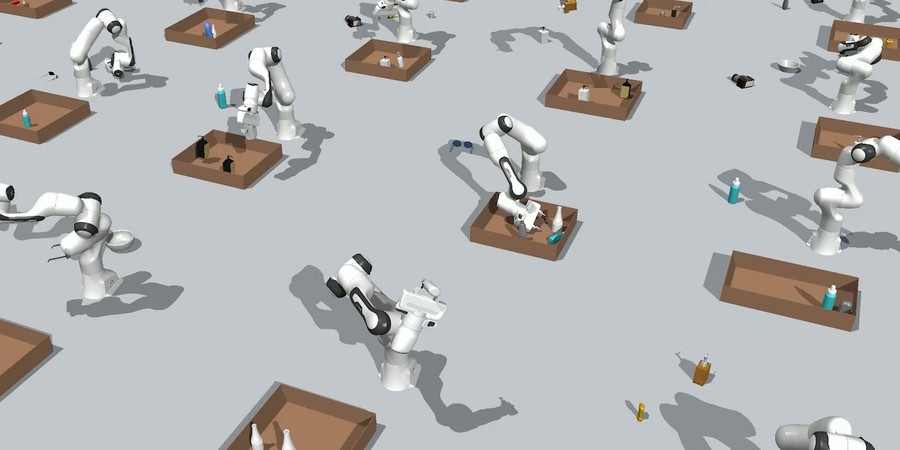

New Technique Helps Robots Pack Objects into a Tight Space

Adam Zewe | MIT News

Researchers coaxed a family of generative AI models to work together to solve multistep robot manipulation problems.

Researchers Create a Tool for Accurately Simulating Complex Systems

Adam Zewe | MIT News Office

The system they developed eliminates a source of bias in simulations, leading to improved algorithms that can boost the performance of applications.