Alex Wilkins | New Scientist

July 28, 2022

A resistor that works in a similar way to nerve cells in the body could be used to build neural networks for machine learning.

Many large machine learning models rely on increasing amounts of processing power to achieve their results, but this has vast energy costs and produces large amounts of heat.

One proposed solution is analogue machine learning, which works like a brain by using electronic devices similar to neurons to act as the parts of the model. However, these devices have so far not been fast, small or efficient enough to provide advantages over digital machine learning.

Murat Onen at the Massachusetts Institute of Technology and his colleagues have created a nanoscale resistor that transmits protons from one terminal to another. This functions a bit like a synapse, a connection between two neurons, where ions flow in one direction to transmit information. But these “artificial synapses” are 1,000 times smaller and 10,000 times faster than their biological counterparts.

Complete article from New Scientist.

Explore

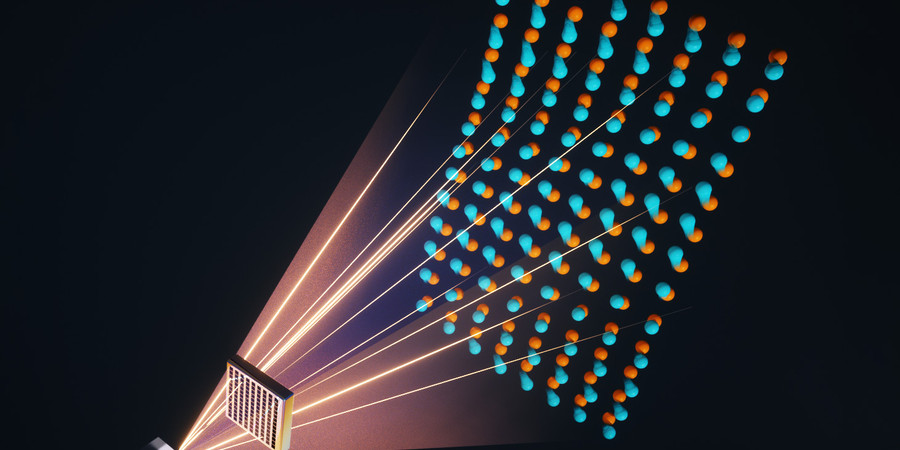

Photonic Processor Could Enable Ultrafast AI Computations with Extreme Energy Efficiency

Adam Zewe | MIT News

This new device uses light to perform the key operations of a deep neural network on a chip, opening the door to high-speed processors that can learn in real-time.

AI Method Radically Speeds Predictions of Materials’ Thermal Properties

Adam Zewe | MIT News

The approach could help engineers design more efficient energy-conversion systems and faster microelectronic devices, reducing waste heat.

A New Way to Let AI Chatbots Converse All Day without Crashing

Adam Zewe | MIT News

Researchers developed a simple yet effective solution for a puzzling problem that can worsen the performance of large language models such as ChatGPT.