Principal Investigators: Luqiao Liu, Marc Baldo

Networks built from devices with intrinsically stochastic switching can be used to implement a Boltzmann machine. For cognitive computing, a Boltzmann machine can be far more efficient than the traditional von Neumann architecture because it directly exploits the statistical mechanics of its building blocks. In a Boltzmann machine, each node in the network can occupy one of two energy states. The probability that a node switches is set stochastically by its interactions with the other nodes in the network. This collective behavior of interacting networks makes them an ideal platform for many optimization and machine learning problems.

Spin-torque magnetic tunnel junctions are ideal candidates for realizing these Boltzmann networks. They offer high tunability, a small footprint, and fast operation, and their stochastic switching is governed by statistical physics. In this project, the researchers will build thermally metastable three-terminal magnetic tunnel junction devices to realize these networks. The switching probability of each device, a magnetic tunnel junction, is determined by the states of the other devices in the network through programmable weight factors that describe the interaction strength between nodes. By integrating stochastic tunnel junctions with programmable resistive devices, the researchers will build a scalable Boltzmann machine with ultralow power consumption and high speed.
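The idea that each node's switching probability depends on the other nodes' states through weights can be illustrated with a short software model. The sketch below is a generic Gibbs-sampling simulation of a binary Boltzmann machine. It is not the project's device-level method. It assumes the standard textbook energy $E(s) = -\tfrac{1}{2}\sum_{i,j} W_{ij} s_i s_j - \sum_i b_i s_i$ with $s_i \in \{0, 1\}$, symmetric weights $W$ with a zero diagonal, and a logistic switching probability. The function names (`energy`, `gibbs_sweep`, `sample`) and the two-node example weights are hypothetical. In hardware, each stochastic tunnel junction would stand in for the random draw in `gibbs_sweep`, and the programmable resistive devices would supply the weights.

```python
import math
import random


def sigmoid(x: float) -> float:
    """Logistic function: probability of the 'on' state for a given field."""
    return 1.0 / (1.0 + math.exp(-x))


def energy(state, weights, biases):
    """E(s) = -1/2 * sum_ij W_ij s_i s_j - sum_i b_i s_i (assumed textbook form)."""
    n = len(state)
    e = 0.0
    for i in range(n):
        e -= biases[i] * state[i]
        for j in range(n):
            e -= 0.5 * weights[i][j] * state[i] * state[j]
    return e


def gibbs_sweep(state, weights, biases, temperature=1.0, rng=random):
    """Update every node once, in order, from its conditional distribution."""
    n = len(state)
    for i in range(n):
        # Local field: the energy gap between s_i = 0 and s_i = 1,
        # set by the bias and the weighted states of the other nodes.
        field = biases[i] + sum(weights[i][j] * state[j] for j in range(n) if j != i)
        p_on = sigmoid(field / temperature)
        # Stochastic switching: this draw plays the role of one device.
        state[i] = 1 if rng.random() < p_on else 0
    return state


def sample(weights, biases, n_sweeps, temperature=1.0, seed=0):
    """Run n_sweeps Gibbs sweeps and count how often each network state appears."""
    rng = random.Random(seed)
    state = [rng.randint(0, 1) for _ in range(len(biases))]
    counts = {}
    for _ in range(n_sweeps):
        gibbs_sweep(state, weights, biases, temperature, rng)
        key = tuple(state)
        counts[key] = counts.get(key, 0) + 1
    return counts


if __name__ == "__main__":
    # Two nodes with a strong positive (hypothetical) coupling: aligned states are favored.
    W = [[0.0, 4.0], [4.0, 0.0]]
    b = [-2.0, -2.0]
    print(sample(W, b, n_sweeps=10000, seed=1))
```

In this example, the states (0, 0) and (1, 1) both have energy 0, while (1, 0) and (0, 1) have energy 2. At temperature 1, the sampler should therefore spend roughly 88% of sweeps (2 / (2 + 2e^-2)) in an aligned state. Changing the weights reshapes this distribution, which is the property a Boltzmann machine uses for optimization and learning.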

Explore

Photonic Processor Could Enable Ultrafast AI Computations with Extreme Energy Efficiency

Adam Zewe | MIT News

This new device uses light to perform the key operations of a deep neural network on a chip, opening the door to high-speed processors that can learn in real-time.

AI Method Radically Speeds Predictions of Materials’ Thermal Properties

Adam Zewe | MIT News

The approach could help engineers design more efficient energy-conversion systems and faster microelectronic devices, reducing waste heat.

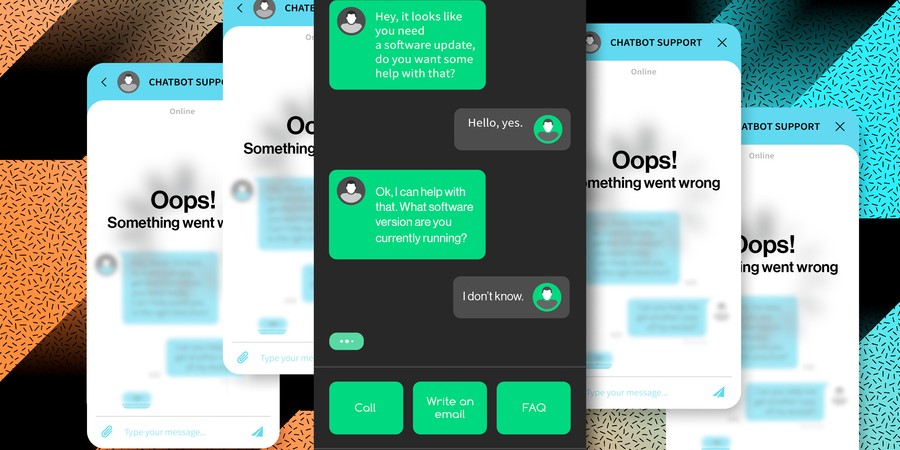

A New Way to Let AI Chatbots Converse All Day without Crashing

Adam Zewe | MIT News

Researchers developed a simple yet effective solution for a puzzling problem that can worsen the performance of large language models such as ChatGPT.