Lauren Hinkel | MIT-IBM Watson AI Lab

November 29, 2022

Graphs, potentially extensive webs of nodes connected by edges, can be used to express and interrogate relationships between data, such as social connections, financial transactions, traffic, energy grids, and molecular interactions. As researchers collect more data and build out these graphical pictures, they will need faster and more efficient methods, as well as more computational power, to conduct deep learning on them in the form of graph neural networks (GNNs).

Now, a new method called SALIENT (SAmpling, sLIcing, and data movemeNT), developed by researchers at MIT and IBM Research, improves training and inference performance by addressing three key computational bottlenecks. This dramatically cuts the runtime of GNNs on large datasets, such as those with on the order of 100 million nodes and 1 billion edges. Further, the team found that the technique scales well as computational power is increased from one to 16 graphics processing units (GPUs). The work was presented at the Fifth Conference on Machine Learning and Systems.
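SALIENT's name refers to stages of mini-batch GNN training: sampling, slicing, and data movement. For readers unfamiliar with what the first two stages do, here is a minimal, generic Python sketch, assuming GraphSAGE-style uniform neighbor sampling with a fixed per-hop fanout. It is not SALIENT's implementation, and the names `sample_neighborhood` and `slice_features` are hypothetical. The sketch samples a multi-hop neighborhood around a batch of seed nodes, then slices out only the feature rows that neighborhood needs. In a real training pipeline, those rows would then be copied to the GPU, which is the data-movement step.

```python
import random
from typing import Dict, List, Sequence, Set


def sample_neighborhood(
    adj: Dict[int, List[int]],
    seeds: Sequence[int],
    fanouts: Sequence[int],
    rng: random.Random,
) -> List[List[int]]:
    """Return the node IDs reached at each hop, starting from the seed batch.

    Assumption: at every hop, each frontier node keeps at most `fanout`
    neighbors, drawn uniformly without replacement (GraphSAGE-style).
    """
    layers = [list(seeds)]
    frontier = list(seeds)
    for fanout in fanouts:
        next_nodes: Set[int] = set()
        for node in frontier:
            nbrs = adj.get(node, [])
            k = min(fanout, len(nbrs))
            next_nodes.update(rng.sample(nbrs, k))
        frontier = sorted(next_nodes)  # deduplicated frontier for the next hop
        layers.append(frontier)
    return layers


def slice_features(features: List[List[float]], node_ids: Sequence[int]) -> List[List[float]]:
    """Gather ("slice") only the feature rows that the sampled subgraph needs."""
    return [features[i] for i in node_ids]


# Toy example (made-up graph): 6 nodes stored as an adjacency list.
adj = {0: [1, 2, 3], 1: [0, 4], 2: [0, 5], 3: [0], 4: [1], 5: [2]}
features = [[float(i), float(i) * 10.0] for i in range(6)]

rng = random.Random(0)
layers = sample_neighborhood(adj, seeds=[0], fanouts=[2, 1], rng=rng)

# Every node touched by any hop needs its input features for this mini-batch.
needed = sorted(set().union(*layers))
batch_features = slice_features(features, needed)
```

Only the sliced rows, not the full feature matrix, would be transferred for each batch; on a graph with on the order of 100 million nodes, copying every feature row for every mini-batch would be impractical, which is why these per-batch stages become bottlenecks worth optimizing.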

Complete article from MIT News.

Explore

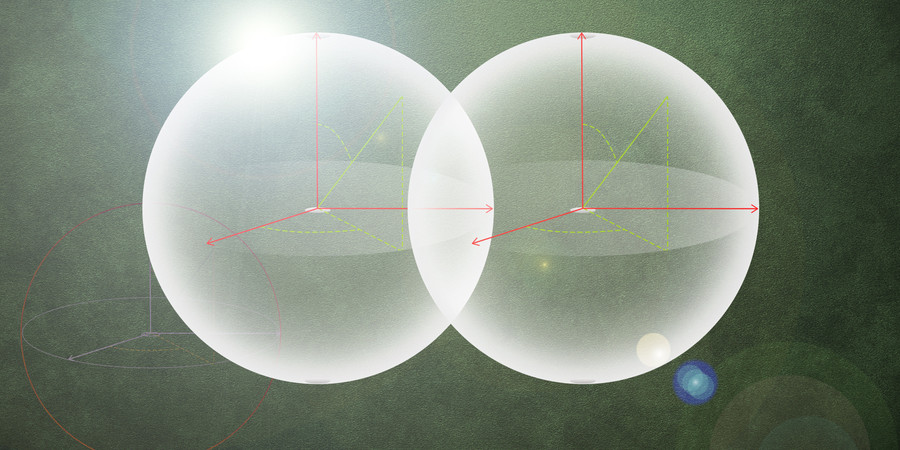

MIT Engineers Advance Toward a Fault-tolerant Quantum Computer

Adam Zewe | MIT News

Researchers achieved a type of coupling between artificial atoms and photons that could enable readout and processing of quantum information in a few nanoseconds.

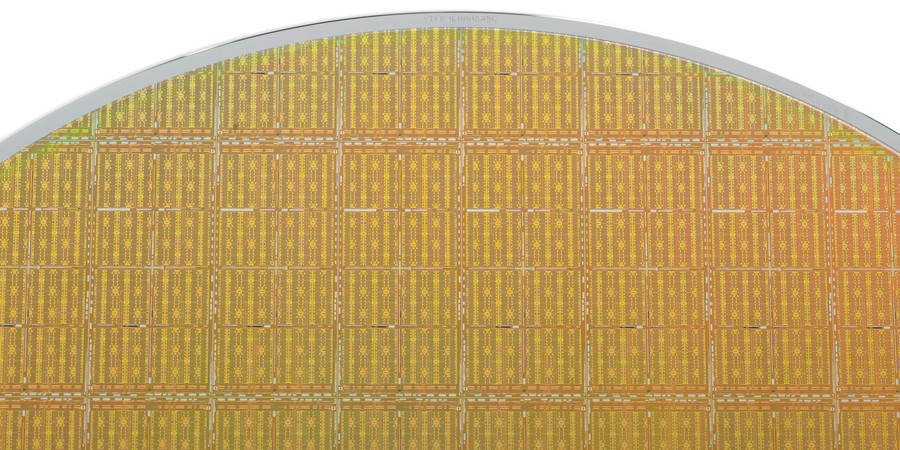

New Chip Tests Cooling Solutions for Stacked Microelectronics

Kylie Foy | MIT Lincoln Laboratory

Preventing 3D integrated circuits from overheating is key to enabling their widespread use.

Analog Compute-in-Memory Accelerators for Deep Learning

Wednesday, April 30, 2025 | 12:00 - 1:00pm ET

Hybrid

Zoom & MIT Campus