Adam Zewe | MIT News Office

October 20, 2022

Ask a smart home device for the weather forecast, and it takes several seconds for the device to respond. One reason for this latency is that connected devices don’t have enough memory or power to store and run the enormous machine-learning models needed to understand what a user is asking of them. Instead, the model is stored in a data center that may be hundreds of miles away, where the answer is computed and then sent back to the device.

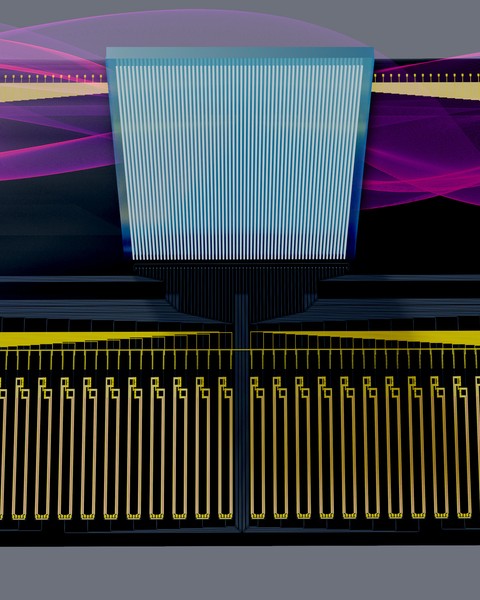

MIT researchers have created a new method for computing directly on these devices, which drastically reduces this latency. Their technique shifts the memory-intensive steps of running a machine-learning model to a central server where components of the model are encoded onto light waves.

Complete article from MIT News.
