Wednesday, September 14, 2022 | 12:00 – 1:00pm ET, Grier Room 34-401

Speaker: Murat Onen, MIT

Join members of the MIT, MTL, and AI Hardware Program communities for the first Joint MTL/AI Hardware Program Seminar.

Abstract: Analog deep-learning processors can offer processing speed and energy efficiency orders of magnitude higher than their traditional digital counterparts, which is imperative if the promise of artificial intelligence is to be realized. However, implementing analog processors faces a significant barrier made up of two coupled components: 1) the absence of devices that satisfy stringent algorithm-imposed demands, and 2) the lack of algorithms that can tolerate inevitable device nonidealities. This talk will present major advances in both directions: a novel near-ideal device technology and a superior neural network training algorithm. The devices realized here for the first time are CMOS-compatible nanoscale protonic programmable resistors that combine the benefits of nanoionics with extreme acceleration of ion transport under strong electric fields. Enabled by a material-level breakthrough, the use of phosphosilicate glass (PSG) as a proton electrolyte, these devices achieve controlled proton intercalation within nanoseconds at high energy efficiency. Separately, a theoretical analysis explains the infamous incompatibility between asymmetric device modulation and conventional neural network training algorithms. By establishing a powerful analogy with classical mechanics, a novel method, Stochastic Hamiltonian Descent, has been developed that instead exploits device asymmetry as a useful feature. In combination, the two developments presented in this talk could prove effective in ultimately realizing the potential of analog deep learning.

Speaker Bio: Murat Onen is a Postdoctoral Researcher at the Massachusetts Institute of Technology (MIT). He holds a PhD in Electrical Engineering and Computer Science from MIT. His research focuses on devices, architectures, and algorithms for analog deep learning, work that has led to 16 patents and numerous publications to date. He is currently developing nanoprotonic programmable resistors and specialized training algorithms for analog crossbar accelerators.

Explore

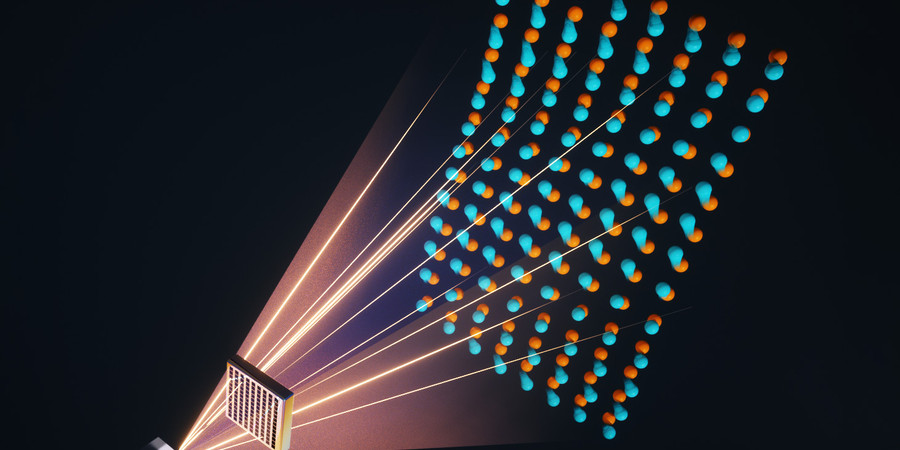

Photonic Processor Could Enable Ultrafast AI Computations with Extreme Energy Efficiency

Adam Zewe | MIT News

This new device uses light to perform the key operations of a deep neural network on a chip, opening the door to high-speed processors that can learn in real-time.

AI Method Radically Speeds Predictions of Materials’ Thermal Properties

Adam Zewe | MIT News

The approach could help engineers design more efficient energy-conversion systems and faster microelectronic devices, reducing waste heat.

A New Way to Let AI Chatbots Converse All Day without Crashing

Adam Zewe | MIT News

Researchers developed a simple yet effective solution for a puzzling problem that can worsen the performance of large language models such as ChatGPT.