Principal Investigator: Anantha Chandrakasan

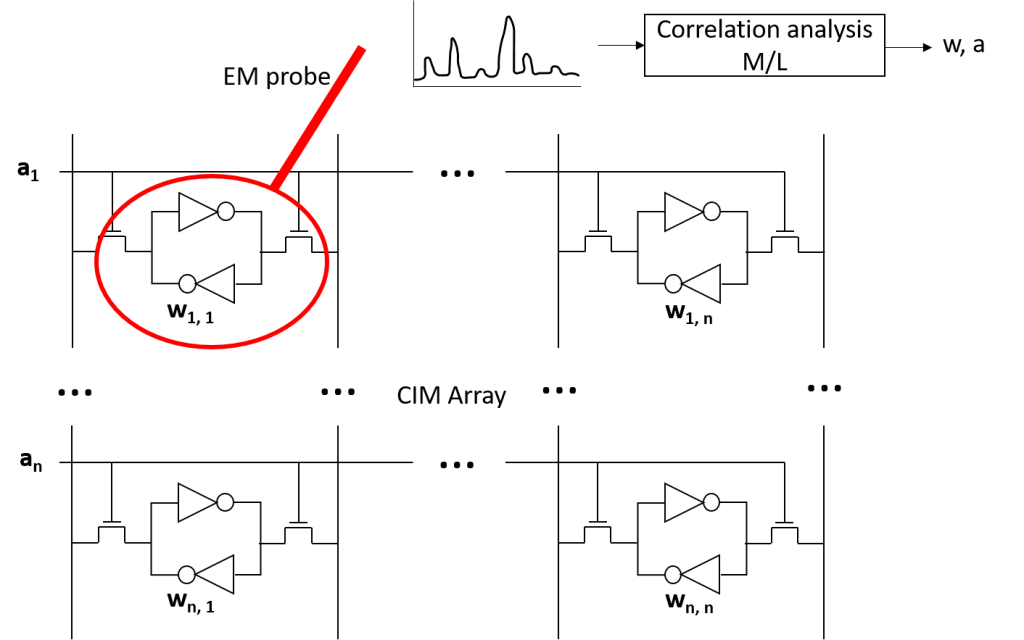

Many edge machine learning accelerators process and store sensitive data that could be of value to attackers. For example, home security systems process inputs that contain many images of passersby’s faces. Furthermore, an attacker who learns the actual weights used to determine whether an intruder is attempting to break in can craft an image input that grants access to the home. Previous work has shown that physical side channels can be used to identify layers and extract secret weights in neural network architectures. However, information leakage differs in realistic applications with demanding and variable scenarios, where general machine learning accelerator chips with configurable processing must be used. Furthermore, there have been no studies on how to protect against these machine learning physical side channel vulnerabilities at the circuit level.


This project plans to investigate side channel vulnerabilities and develop protections for at-edge custom in-memory computing (IMC) integrated circuits. This will involve exploring the vulnerabilities of memory to physical side channel attacks, as well as protections against them. The work will then be extended to evaluate and protect the various components of IMC, including SRAM compute, analog memory interfaces, additional near-memory compute circuitry, and other peripherals.

In collaboration with: Maitreyi Ashok
