Mosaic ML

Mosaic ML aims to make neural network training more efficient through algorithmic methods that deliver speed, boost quality, and reduce cost. Built by a mosaic of passionate technologists, including MIT principal investigator Michael Carbin, the team came together to discover new ways to make machine learning training better, faster, and more efficient in order to accelerate breakthroughs.

The MosaicML Composer is an open-source deep learning library purpose-built to make it easy to add algorithmic methods and compose them into novel recipes that speed up model training and improve model quality. The library includes 20 methods for computer vision and natural language processing, in addition to standard models, datasets, and benchmarks.
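The idea of composing methods into a recipe can be illustrated with a minimal plain-Python sketch. It does not use the Composer API: `TrainState`, `double_batch_size`, `scale_lr_with_batch`, and `compose` are hypothetical names invented for illustration, and the two toy methods are not among the library's methods.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class TrainState:
    """Toy stand-in for the state of a training run (hypothetical)."""
    batch_size: int = 32
    learning_rate: float = 0.1
    applied: List[str] = field(default_factory=list)


# In this sketch, a "method" is any function that modifies the state in place.
Method = Callable[[TrainState], None]


def double_batch_size(state: TrainState) -> None:
    """Hypothetical method: train on batches twice as large."""
    state.batch_size *= 2
    state.applied.append("double_batch_size")


def scale_lr_with_batch(state: TrainState) -> None:
    """Hypothetical method: scale the learning rate relative to a base batch size of 32."""
    state.learning_rate *= state.batch_size / 32
    state.applied.append("scale_lr_with_batch")


def compose(methods: List[Method]) -> Method:
    """Combine several methods into one recipe, applied in list order."""
    def recipe(state: TrainState) -> None:
        for method in methods:
            method(state)
    return recipe


# Build a recipe from two methods and apply it to a fresh state.
recipe = compose([double_batch_size, scale_lr_with_batch])
state = TrainState()
recipe(state)
print(state.batch_size, state.learning_rate, state.applied)
```

In this sketch the order of the list matters: the learning-rate method reads the batch size that the earlier method has already changed, which is one reason a recipe is more than an unordered set of methods.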

Detailed information is available from Mosaic ML.

Explore

AI Tool Generates High-Quality Images Faster Than State-of-the-Art Approaches

Adam Zewe | MIT News

Researchers fuse the best of two popular methods to create an image generator that uses less energy and can run locally on a laptop or smartphone.

New Security Protocol Shields Data From Attackers During Cloud-based Computation

Adam Zewe | MIT News

The technique leverages quantum properties of light to guarantee security while preserving the accuracy of a deep-learning model.

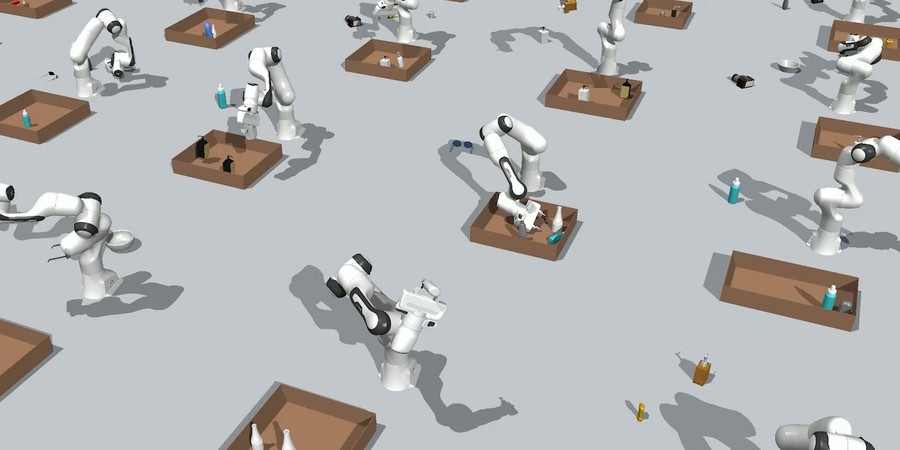

New Technique Helps Robots Pack Objects into a Tight Space

Adam Zewe | MIT News

Researchers coaxed a family of generative AI models to work together to solve multistep robot manipulation problems.