Adam Zewe | MIT News Office

July 28, 2022

As scientists push the boundaries of machine learning, the amount of time, energy, and money required to train increasingly complex neural network models is skyrocketing. A new area of artificial intelligence called analog deep learning promises faster computation with a fraction of the energy usage.

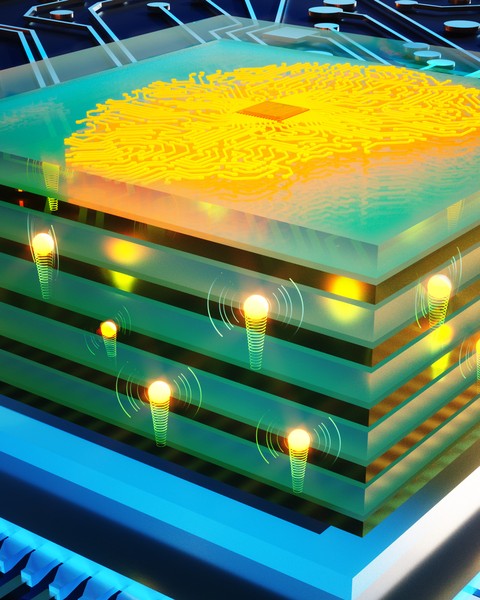

Programmable resistors are the key building blocks in analog deep learning, just as transistors are the core elements of digital processors. By repeating arrays of programmable resistors in complex layers, researchers can create a network of analog artificial “neurons” and “synapses” that execute computations just like a digital neural network. This network can then be trained to perform complex AI tasks such as image recognition and natural language processing.
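The computation those resistor arrays carry out can be illustrated with a highly idealized model. The sketch below assumes the standard textbook picture of a resistive crossbar, which is not a detail stated in this article: each programmable resistor is an ideal linear conductance sitting where an input row crosses an output column. By Ohm's law each resistor passes a current equal to its conductance times the row voltage, and by Kirchhoff's current law those currents add up along each column wire. The result is a matrix-vector product, the core operation of a neural-network layer. The function names, the clipping bounds, and the "programming" step are hypothetical and chosen only for illustration. They do not describe the MIT team's device or training method.

```python
# Idealized analog crossbar: an illustrative sketch, not the MIT device.
# Assumption: each programmable resistor is an ideal linear conductance
# G[i][j] (in siemens) at the crossing of input row i and output column j.
# Wire resistance, noise, and device nonlinearity are ignored.

def crossbar_currents(conductances, voltages):
    """Return the current collected on each output column.

    Ohm's law gives the current through one resistor as G[i][j] * V[i];
    Kirchhoff's current law sums those currents along each column wire.
    The result is the matrix-vector product I = G^T V.
    """
    n_rows = len(conductances)
    if n_rows != len(voltages):
        raise ValueError("need one input voltage per row")
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for i in range(n_rows):
        for j in range(n_cols):
            currents[j] += conductances[i][j] * voltages[i]
    return currents


def program_resistor(conductances, i, j, delta, g_min=0.0, g_max=1e-3):
    """Hypothetical 'programming' step used during training: nudge one
    conductance by delta, clipped to an assumed physical range
    [g_min, g_max]."""
    g = conductances[i][j] + delta
    conductances[i][j] = min(max(g, g_min), g_max)


# Usage: 2 input rows (voltages in volts), 3 output columns.
G = [[1e-4, 2e-4, 0.0],
     [3e-4, 0.0, 5e-4]]
V = [0.5, 1.0]
print(crossbar_currents(G, V))
```

In this picture, "training" amounts to repeatedly adjusting individual conductances, which is why how quickly a programmable resistor can be reprogrammed matters for overall training speed.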

A multidisciplinary team of MIT researchers set out to push the speed limits of a type of human-made analog synapse that they had previously developed. In the fabrication process, they used a practical inorganic material that enables their devices to run 1 million times faster than previous versions, which is also about 1 million times faster than the synapses in the human brain.

Complete article from MIT News.

Explore

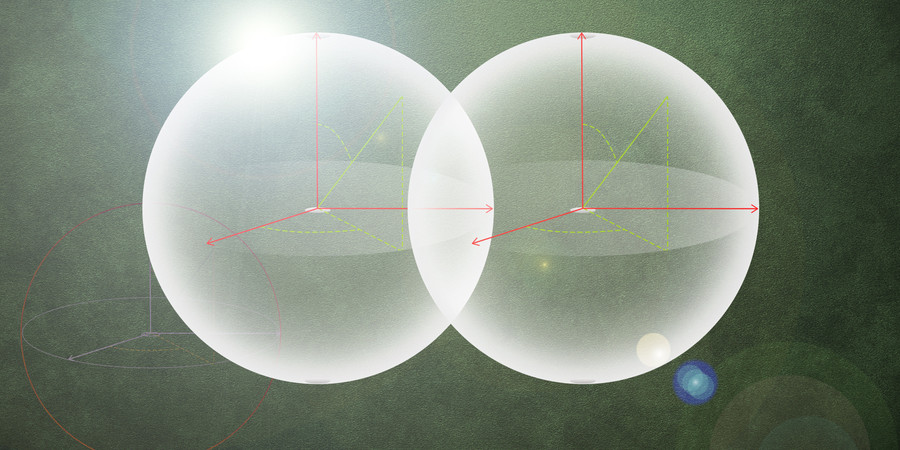

MIT Engineers Advance Toward a Fault-tolerant Quantum Computer

Adam Zewe | MIT News

Researchers achieved a type of coupling between artificial atoms and photons that could enable readout and processing of quantum information in a few nanoseconds.

Energy-Efficient and Environmentally Sustainable Computing Systems Leveraging Three-Dimensional Integrated Circuits

Wednesday, May 14, 2025 | 12:00 - 1:00pm ET

Hybrid

Zoom & MIT Campus

The Road to Gate-All-Around CMOS

Monday, April 14, 2025 | 10:00 AM to 11:00 AM

In-Person

Haus Room (36-428)

50 Vassar Street Cambridge, MA