October 30, 2023

Researchers from MIT and NVIDIA have developed two techniques that accelerate the processing of sparse tensors, a type of data structure that’s used for high-performance computing tasks. The complementary techniques could result in significant improvements to the performance and energy-efficiency of systems like the massive machine-learning models that drive generative artificial intelligence.

Tensors are data structures used by machine-learning models. Both of the new methods seek to efficiently exploit what’s known as sparsity, meaning zero values, in the tensors. When processing these tensors, one can skip over the zeros and save on both computation and memory. For instance, anything multiplied by zero is zero, so that operation can be skipped. And the tensor can be compressed (zeros don’t need to be stored) so a larger portion can be stored in on-chip memory.
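To make the two savings concrete, here is a minimal sketch in plain Python. It is an illustration only, not the researchers' technique: the function names `compress` and `sparse_dot` and the coordinate-style (index, value) layout are assumptions chosen for clarity.

```python
def compress(dense):
    """Store only the nonzero values, each paired with its position.

    Hypothetical helper: a simple coordinate-style format, assumed here
    for illustration.
    """
    return [(i, v) for i, v in enumerate(dense) if v != 0]


def sparse_dot(compressed, other):
    """Dot product that multiplies only where the first operand is nonzero.

    Every skipped zero is a multiplication that never happens.
    """
    return sum(v * other[i] for i, v in compressed)


dense = [0, 3, 0, 0, 5, 0, 0, 2]   # 8 entries, only 3 are nonzero
other = [1, 2, 3, 4, 5, 6, 7, 8]

packed = compress(dense)            # 3 stored pairs instead of 8 values
result = sparse_dot(packed, other)  # 3 multiplications instead of 8
```

The compressed form gives the same answer as the dense computation while storing and multiplying fewer entries. The cost is the bookkeeping for positions, which is part of why finding nonzeros efficiently matters.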

However, there are several challenges to exploiting sparsity. Finding the nonzero values in a large tensor is no easy task. Existing approaches often limit the locations of nonzero values by enforcing a sparsity pattern to simplify the search, but this limits the variety of sparse tensors that can be processed efficiently.

Another challenge is that the number of nonzero values can vary in different regions of the tensor. This makes it difficult to determine how much space is required to store different regions in memory. To make sure the region fits, more space is often allocated than is needed, causing the storage buffer to be underutilized. This increases off-chip memory traffic, which increases energy consumption.
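The underutilization problem can be sketched with a toy example. The tile size, the data, and the policy of sizing the buffer for the densest region are all assumptions made for illustration. They are not taken from the researchers' design.

```python
def nonzeros_per_tile(values, tile_size):
    """Count the nonzero entries in each fixed-size region (tile)."""
    return [
        sum(1 for v in values[start:start + tile_size] if v != 0)
        for start in range(0, len(values), tile_size)
    ]


def buffer_utilization(counts, buffer_slots):
    """Fraction of the buffer each tile actually fills."""
    return [count / buffer_slots for count in counts]


# Hypothetical tensor, flattened, with uneven sparsity across four tiles.
values = [0, 0, 1, 0,   4, 5, 6, 0,   0, 0, 0, 0,   7, 0, 8, 0]

counts = nonzeros_per_tile(values, tile_size=4)

# Assumed policy: reserve enough slots for the densest tile so every tile fits.
buffer_slots = max(counts)

utilization = buffer_utilization(counts, buffer_slots)
average = sum(utilization) / len(utilization)
```

In this toy case only one tile fills the buffer. The others leave slots empty, so on average about half of the reserved space goes unused.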

Complete article from MIT News.

Explore

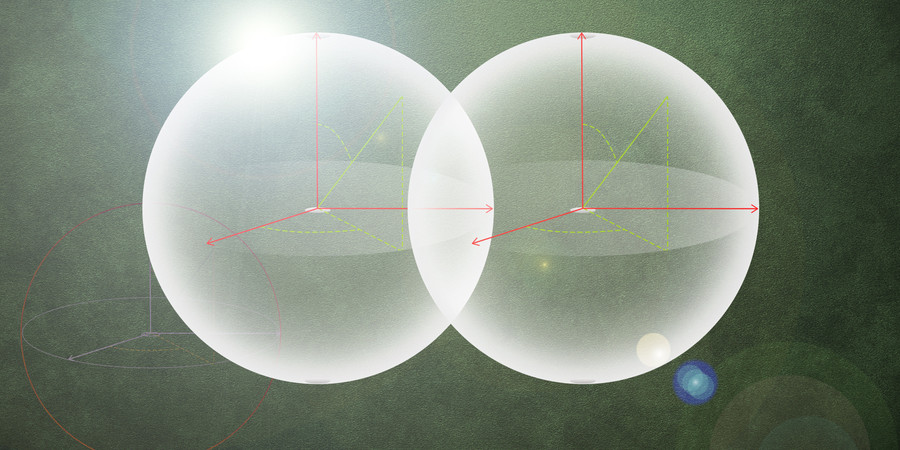

MIT Engineers Advance Toward a Fault-tolerant Quantum Computer

Adam Zewe | MIT News

Researchers achieved a type of coupling between artificial atoms and photons that could enable readout and processing of quantum information in a few nanoseconds.

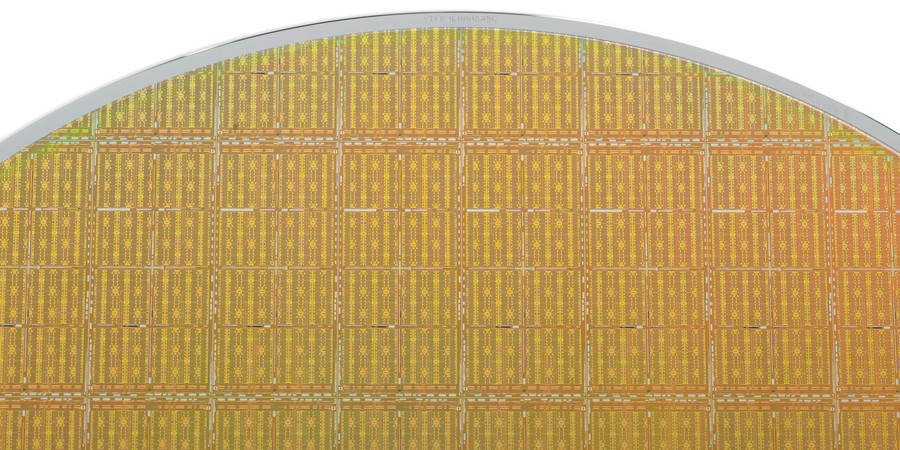

New Chip Tests Cooling Solutions for Stacked Microelectronics

Kylie Foy | MIT Lincoln Laboratory

Preventing 3D integrated circuits from overheating is key to enabling their widespread use.

Analog Compute-in-Memory Accelerators for Deep Learning

Wednesday, April 30, 2025 | 12:00 - 1:00pm ET

Hybrid

Zoom & MIT Campus