Daniel Ackerman | MIT News Office

Researcher Jonathan Frankle’s “lottery ticket hypothesis” posits that, hidden within massive neural networks, leaner subnetworks can complete the same task more efficiently. The trick is finding those “lucky” subnetworks, dubbed winning lottery tickets. In a new paper, Frankle and colleagues report finding such subnetworks lurking within BERT, a state-of-the-art neural network approach to natural language processing (NLP). A branch of artificial intelligence, NLP aims to decipher and analyze human language, with applications like predictive text generation and online chatbots. In computational terms, BERT is bulky, typically demanding supercomputing power unavailable to most users. Access to BERT’s winning lottery ticket could level the playing field, potentially allowing more users to develop effective NLP tools on a smartphone.

Complete article from MIT News.

Explore

Photonic Processor Could Enable Ultrafast AI Computations with Extreme Energy Efficiency

Adam Zewe | MIT News

This new device uses light to perform the key operations of a deep neural network on a chip, opening the door to high-speed processors that can learn in real time.

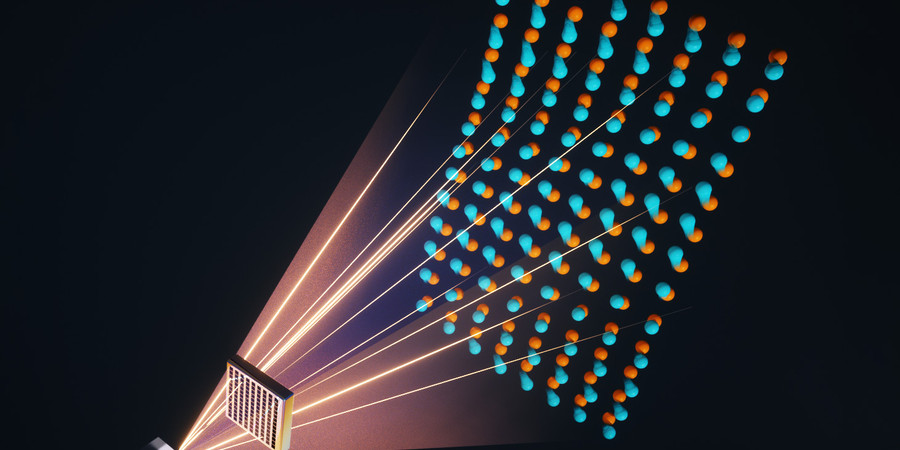

AI Method Radically Speeds Predictions of Materials’ Thermal Properties

Adam Zewe | MIT News

The approach could help engineers design more efficient energy-conversion systems and faster microelectronic devices, reducing waste heat.

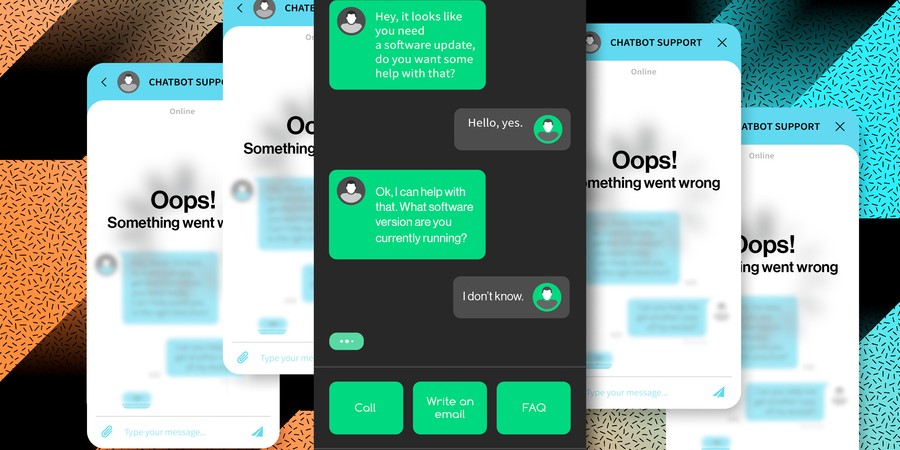

A New Way to Let AI Chatbots Converse All Day without Crashing

Adam Zewe | MIT News

Researchers developed a simple yet effective solution for a puzzling problem that can worsen the performance of large language models such as ChatGPT.