Sparse tensor algebra is an important computational kernel in many popular applications, such as image classification and language processing. The sparsity in these kernels has motivated the development of many sparse tensor accelerators. However, despite the abundance of existing proposals, there has been no systematic way to understand, model, and develop sparse tensor accelerators. To address this gap, we first present a well-defined taxonomy of sparsity-related acceleration features that enables a systematic understanding of the sparse tensor accelerator design space. Based on this taxonomy, we propose Sparseloop, the first analytical modeling tool for fast, accurate, and flexible evaluation of sparse tensor accelerators, enabling early-stage exploration of a large and diverse design space. Using Sparseloop, we search the design space and present an efficient and flexible deep neural network (DNN) accelerator that exploits a novel sparsity pattern called hierarchical structured sparsity. The key insight is that diverse degrees of sparsity can be accelerated efficiently by composing them hierarchically from simple sparsity patterns.

Speaker

Nellie Wu

Nellie Wu is a PhD candidate in Electrical Engineering and Computer Science at the Massachusetts Institute of Technology, advised by Professor Joel Emer and Professor Vivienne Sze. Wu received an MS in Electrical Engineering and Computer Science from MIT and a BA in Electrical Engineering and Computer Science from Cornell University. Her current research interests include computer architecture and computer systems. More specifically, she focuses on modeling and designing energy-efficient hardware accelerators for data- and computation-intensive tensor algebra applications, such as deep neural networks and scientific simulations.

Explore

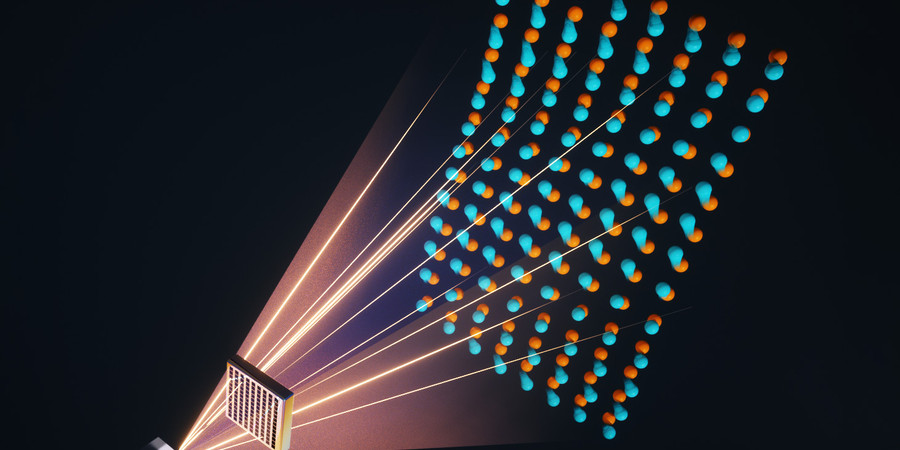

Photonic Processor Could Enable Ultrafast AI Computations with Extreme Energy Efficiency

Adam Zewe | MIT News

This new device uses light to perform the key operations of a deep neural network on a chip, opening the door to high-speed processors that can learn in real-time.

AI Method Radically Speeds Predictions of Materials’ Thermal Properties

Adam Zewe | MIT News

The approach could help engineers design more efficient energy-conversion systems and faster microelectronic devices, reducing waste heat.

A New Way to Let AI Chatbots Converse All Day without Crashing

Adam Zewe | MIT News

Researchers developed a simple yet effective solution for a puzzling problem that can worsen the performance of large language models such as ChatGPT.