AI Tool Generates High-Quality Images Faster Than State-of-the-Art Approaches

Adam Zewe | MIT News

Researchers fuse the best of two popular methods to create an image generator that uses less energy and can run locally on a laptop or smartphone.

New Security Protocol Shields Data From Attackers During Cloud-based Computation

Adam Zewe | MIT News

The technique leverages quantum properties of light to guarantee security while preserving the accuracy of a deep-learning model.

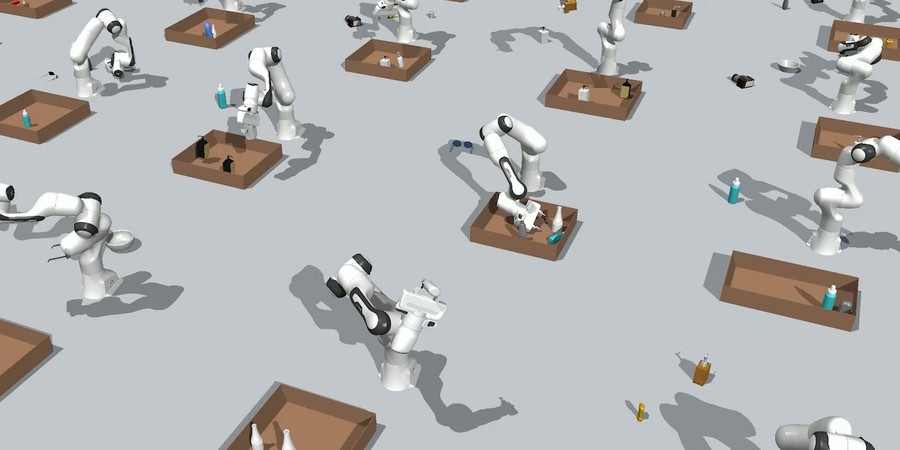

New Technique Helps Robots Pack Objects into a Tight Space

Adam Zewe | MIT News

Researchers coaxed a family of generative AI models to work together to solve multistep robot manipulation problems.

Researchers Create a Tool for Accurately Simulating Complex Systems

Adam Zewe | MIT News Office

The system they developed eliminates a source of bias in simulations, leading to improved algorithms that can boost the performance of applications.

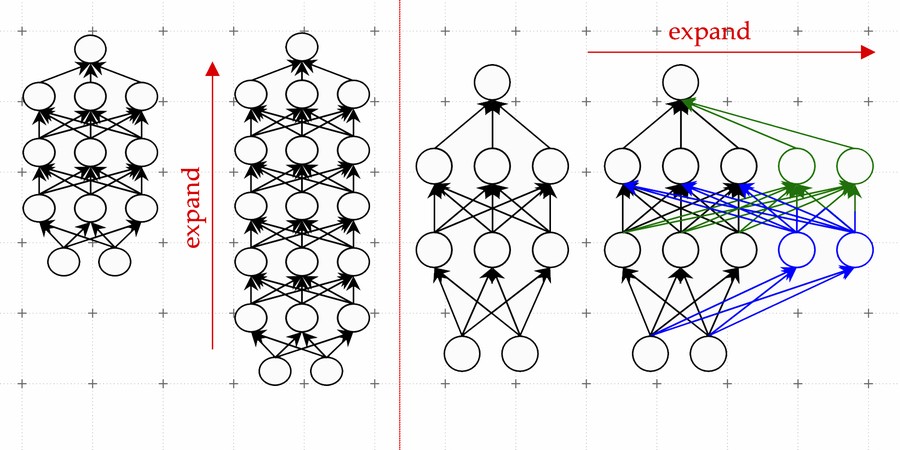

Learning to Grow Machine-learning Models

Adam Zewe | MIT News Office

New LiGO technique accelerates training of large machine-learning models, reducing the monetary and environmental cost of developing AI applications.

New Method Accelerates Data Retrieval in Huge Databases

Adam Zewe | MIT News Office

Researchers use machine learning to build faster and more efficient hash functions, which are a key component of databases.

Making Computing More Brain-like

Wednesday, March 15, 2023 | 12:00 - 1:00pm ET

MIT Building 36, Room 426, Allen Room

Hybrid

Speaker: Mike Davies, Intel

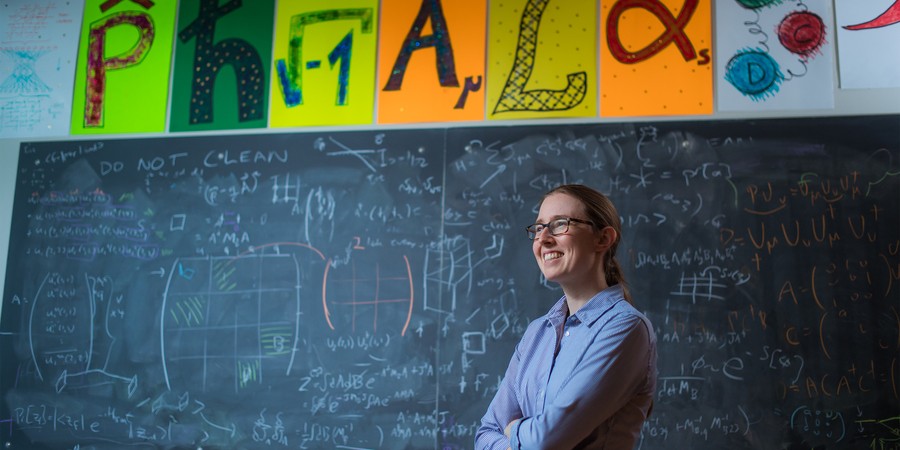

Phiala Shanahan Is Seeking Fundamental Answers About Our Physical World

Jennifer Chu | MIT News Office

With supercomputers and machine learning, the physicist aims to illuminate the structure of everyday particles and uncover signs of dark matter.

Thesis Defense: Delocalized Photonic Deep Learning on the Internet’s Edge

Monday, February 6, 2023 | 5:00pm - 7:00pm ET

Speaker: Alex Sludds, MIT PhD Candidate

Breaking the Scaling Limits of Analog Computing

Adam Zewe | MIT News Office

MIT researchers have developed a new technique that could diminish errors that hamper the performance of super-fast analog optical neural networks.