Ghobadi Wins SIGCOMM Rising Star Award

Alex Shipps | MIT CSAIL News

Manya Ghobadi aims to make large-scale computer networks more efficient, ultimately developing adaptive smart networks.

Deep Learning with Light

Adam Zewe | MIT News Office

A new piece of hardware, called a smart transceiver, uses silicon photonics to accelerate machine-learning computations on smart speakers and other low-power connected devices.

TinyML and Efficient Deep Learning: Course 6.S965

Fall 2022 | Tuesdays & Thursdays, 3:30 - 5:00pm ET

Speaker: Song Han, MIT

INFER Fireside Chat – Reasserting U.S. Leadership in Microelectronics

Wednesday, May 11, 2022 | 2:00pm - 3:00pm ET

Speaker: Jesús del Alamo, MIT

Spins, Bits, and Flips: Essentials for High-Density Magnetic Random-Access Memory

Tuesday, April 19, 2022 | 11:00am ET, von Hippel Room, 13-2137

Speaker: Tiffany S. Santos, Western Digital Corporation

A New Programming Language for High-performance Computers

Steve Nadis | MIT CSAIL

With a tensor language prototype, “speed and correctness do not have to compete ... they can go together, hand-in-hand.”

Memristor-based Hybrid Analog-Digital Computing Platform for Mobile Robotics

Monday, February 28, 2022 | 1:00 PM – 2:00 PM ET

Speaker: Wei Wu, University of Southern California

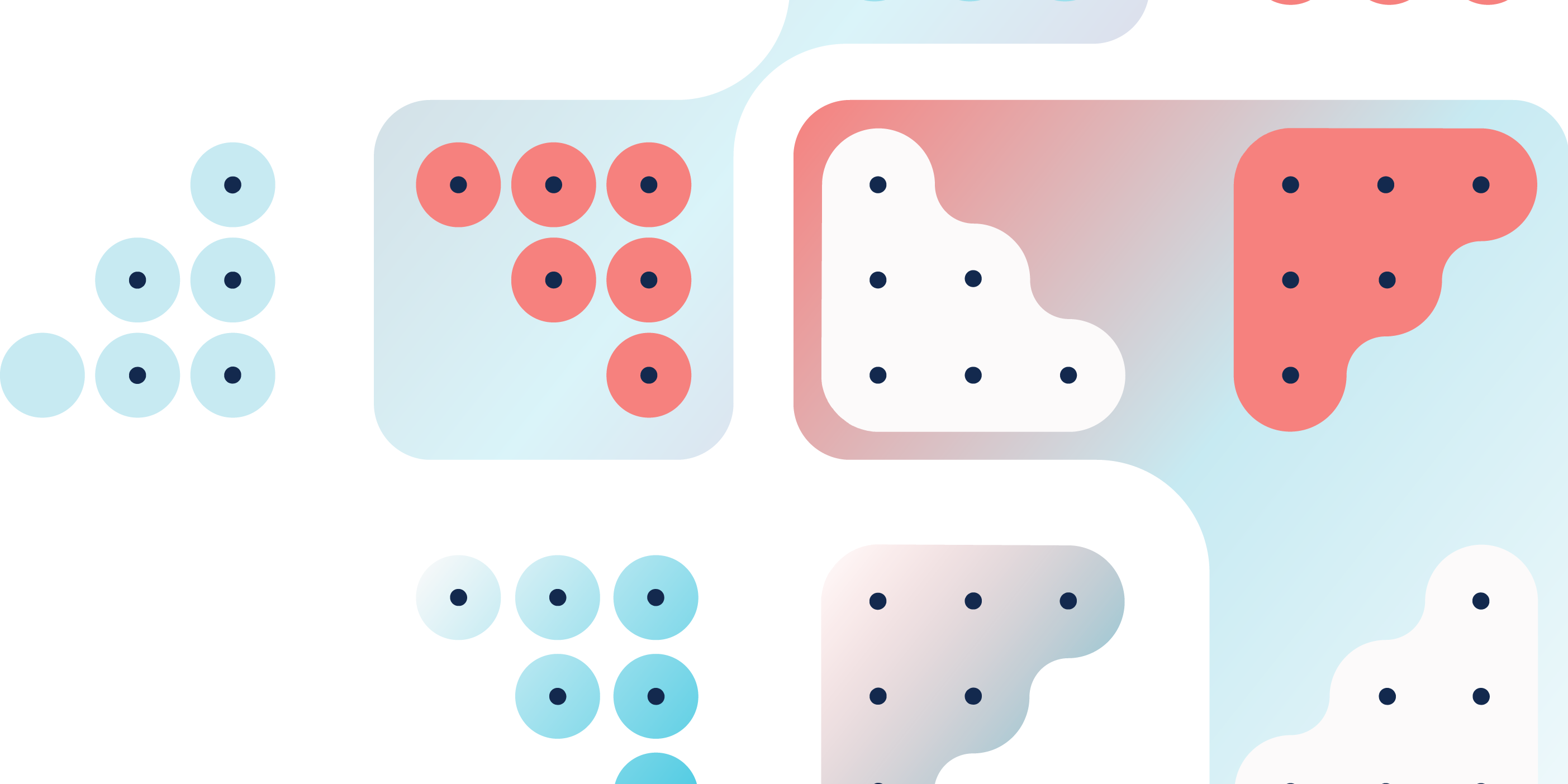

TinyML is Bringing Neural Networks to Small Microcontrollers

Ben Dickson | TechTalks

Tiny machine learning, or TinyML, is suited for devices with limited memory and processing power, and for settings in which internet connectivity is either absent or limited.

AI’s Smarts Now Come With a Big Price Tag

Will Knight | Wired Magazine

As language models get more complex, they also get more expensive to create and run. One option is Mosaic ML, a startup spun out of MIT that is developing software tricks designed to increase the efficiency of machine-learning training.

Mosaic ML

Mosaic ML aims to make neural network training more efficient, using algorithmic methods that deliver speed, boost quality, and reduce cost.