Photonic Processor Could Enable Ultrafast AI Computations with Extreme Energy Efficiency

Adam Zewe | MIT News

This new device uses light to perform the key operations of a deep neural network on a chip, opening the door to high-speed processors that can learn in real time.

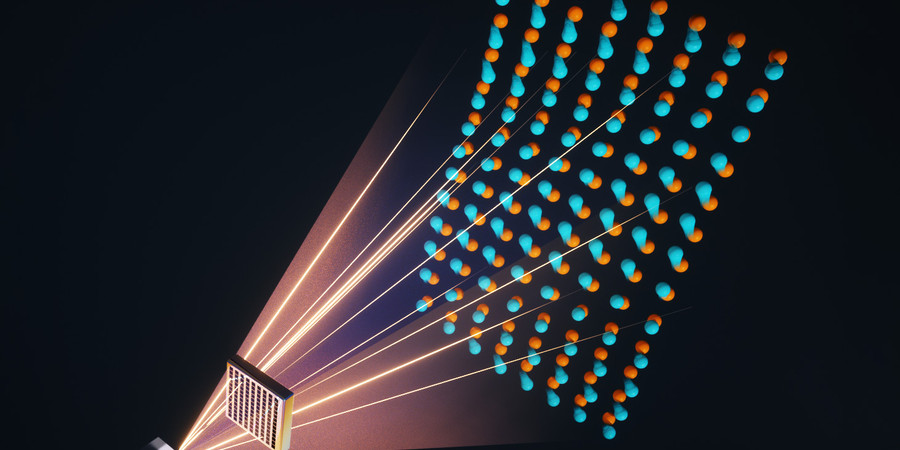

AI Method Radically Speeds Predictions of Materials’ Thermal Properties

Adam Zewe | MIT News

The approach could help engineers design more efficient energy-conversion systems and faster microelectronic devices, reducing waste heat.

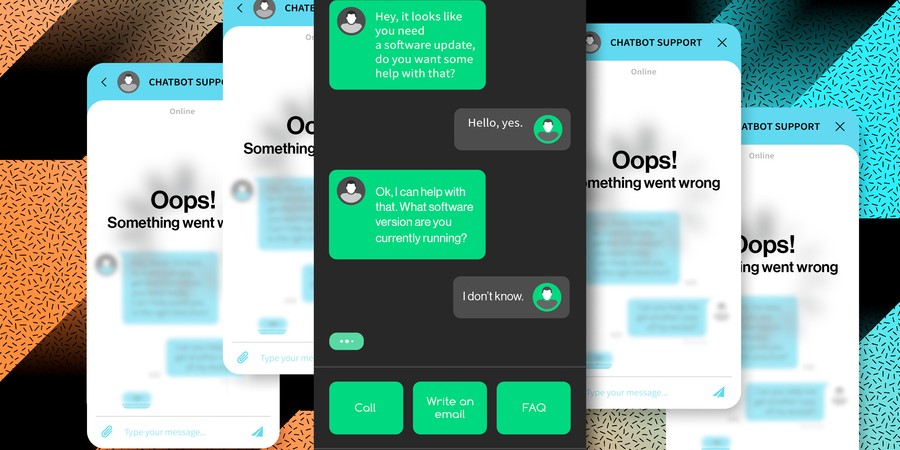

A New Way to Let AI Chatbots Converse All Day without Crashing

Adam Zewe | MIT News

Researchers developed a simple yet effective solution for a puzzling problem that can worsen the performance of large language models such as ChatGPT.

Technique Enables AI on Edge Devices to Keep Learning Over Time

Adam Zewe | MIT News

With the PockEngine training method, machine-learning models can efficiently and continuously learn from user data on edge devices like smartphones.

Memristive Crossbar Arrays for Analog In-Memory Computing and Neuromorphic Engineering

Wednesday, October 11, 2023 | 12:00–1:00 pm ET

Hybrid

Grier A (34-401A)

50 Vassar Street Cambridge, MA

Helping Computer Vision and Language Models Understand What They See

Adam Zewe | MIT News

Researchers use synthetic data to improve a model’s ability to grasp conceptual information, which could enhance automatic captioning and question-answering systems.

Building Tools To Learn Human Brain Processes

Imagination + AI, MIT CSAIL, Forbes

The CSAIL Imagination in Action @ MIT Symposium aimed to educate and motivate entrepreneurs on building successful AI-focused companies, attracting a diverse audience passionate about AI's transformative potential.

Machine-learning System Based on Light Could Yield More Powerful, Efficient Large Language Models

Elizabeth A. Thomson | Materials Research Laboratory

The MIT system demonstrates a more than 100-fold improvement in energy efficiency and a 25-fold improvement in compute density compared with current systems.

Systematic Modeling and Design of Sparse Tensor Accelerators

Friday, May 5, 2023

Nellie Wu, MIT

Busy GPUs: Sampling and Pipelining Method Speeds Up Deep Learning on Large Graphs

Lauren Hinkel | MIT-IBM Watson AI Lab

A new technique significantly reduces training and inference time on large datasets, helping models keep pace with fast-moving data in applications such as finance, social networks, and cryptocurrency fraud detection.