AI’s Smarts Now Come With a Big Price Tag

Will Knight | Wired Magazine

As language models get more complex, they also get more expensive to create and run. One option is Mosaic ML, a startup spun out of MIT that is developing software tricks designed to make machine-learning training more efficient.

PointAcc: Efficient Point Cloud Accelerator

Tuesday, October 19, 2021 | 3:00pm – 4:15pm ET

Speakers: Yujun Lin and Song Han, MIT

Natural Language Processing Accelerator for Transformer Models

Song Han, Anantha Chandrakasan

This project aims to develop efficient processors that run natural language processing directly on edge devices, to ensure privacy, low latency and extended battery life. The goal is to accelerate the entire transformer model (as opposed to just the attention mechanism) to reduce data movement across layers.

Boltzmann Network with Stochastic Magnetic Tunnel Junctions

Luqiao Liu, Marc Baldo

Networks formed by devices with intrinsically stochastic switching properties can be used to build a Boltzmann machine. For cognitive computing, such a machine can be far more efficient than a traditional von Neumann architecture because it exploits the statistical mechanics of its building blocks.
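The blurb does not describe the project's algorithm. As a rough illustration only, assuming the standard textbook Boltzmann machine with binary units and symmetric weights, the Python sketch below shows what each stochastic device would be doing: a unit switches to 1 with probability given by the logistic function of its local field, so repeated sweeps sample low-energy states. A software random number generator stands in for the physical switching noise, and the function names (`energy`, `gibbs_sweep`, `sample`) are hypothetical.

```python
import math
import random


def sigmoid(x: float) -> float:
    """Numerically stable logistic function: P(unit switches to 1)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def energy(state, weights, biases):
    """Boltzmann machine energy E(s) = -sum_i b_i s_i - sum_{i<j} w_ij s_i s_j."""
    n = len(state)
    e = -sum(b * s for b, s in zip(biases, state))
    for i in range(n):
        for j in range(i + 1, n):
            e -= weights[i][j] * state[i] * state[j]
    return e


def gibbs_sweep(state, weights, biases, rng, temperature=1.0):
    """One sequential Gibbs sweep over all binary (0/1) units.

    Each unit plays the role of a stochastic switching device (assumption):
    it is set to 1 with probability sigmoid(local_field / T), otherwise 0.
    """
    n = len(state)
    for i in range(n):
        # Local field: own bias plus weighted input from every other unit.
        field = biases[i] + sum(weights[i][j] * state[j] for j in range(n) if j != i)
        state[i] = 1 if rng.random() < sigmoid(field / temperature) else 0
    return state


def sample(weights, biases, steps, seed=0):
    """Run `steps` sweeps from a random start and return every visited state."""
    rng = random.Random(seed)
    state = [rng.randint(0, 1) for _ in biases]
    history = []
    for _ in range(steps):
        gibbs_sweep(state, weights, biases, rng)
        history.append(tuple(state))
    return history


if __name__ == "__main__":
    # Two units with a strong positive coupling: agreeing states have lower energy.
    W = [[0, 4], [4, 0]]
    b = [-2, -2]
    states = sample(W, b, steps=5000)
    agree = sum(1 for s in states if s[0] == s[1]) / len(states)
    print(f"fraction of samples where the units agree: {agree:.2f}")
```

With these example weights, the states (0, 0) and (1, 1) have energy 0 and the mixed states have energy 2, so in the long run the units should agree in roughly 1 / (1 + e^-2), or about 88%, of samples. The hardware appeal is that a physical device can supply the random switching decision for free instead of computing it.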

Electrochemistry and Material Science of Proton-based Electrochemical Synapses

Bilge Yildiz, Ju Li

Electrochemical ionic-electronic devices have immense potential to enable a new domain of programmable hardware for machine intelligence.

A Framework to Evaluate Energy Efficiency and Performance of Analog Neural Networks

Vivienne Sze, Joel Emer

This project pursues an integrated framework of energy-modeling and performance-evaluation tools to systematically explore and estimate the energy efficiency and performance of analog neural network architectures, with full consideration of the electrical characteristics of the synaptic elements and interface circuits.

CMOS-Compatible Ferroelectric Synapse Technology for Analog Neural Networks

Jesús del Alamo

This research project investigates a new ferroelectric synapse technology based on metal oxides that is designed to be fully compatible with back-end CMOS processing and promises highly energy-efficient operation.

Crossing the Hardware-Software Divide for Faster AI

Thursday, April 29, 2021 | 12:00pm – 1:00pm ET

Panel Discussion: Vivienne Sze, Song Han, Aude Oliva

Using Artificial Intelligence to Generate 3D Holograms in Real-time

Daniel Ackerman | MIT News Office

Despite years of hype, virtual reality headsets have yet to topple TV or computer screens as the go-to devices for video viewing.

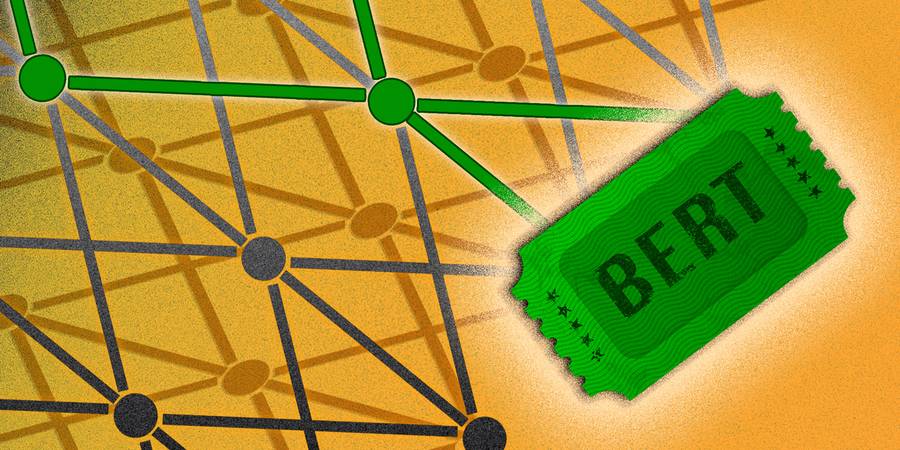

Shrinking Massive Neural Networks Used to Model Language

Daniel Ackerman | MIT News Office

Researcher Jonathan Frankle's “lottery ticket hypothesis” posits that leaner subnetworks hidden within massive neural networks can complete the same task more efficiently.
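The news item does not spell out how such subnetworks are found. Assuming the procedure from the original lottery ticket work (train the network, prune the smallest-magnitude weights, then rewind the surviving weights to their initial values), one pruning round can be sketched in plain Python as below. Training itself is left out: the `trained` weights are taken as given, and the helper names `prune_round` and `rewind` are hypothetical.

```python
from typing import List


def prune_round(trained: List[float], mask: List[int], prune_fraction: float) -> List[int]:
    """Remove `prune_fraction` of the still-alive weights with the smallest |w|.

    `mask` holds 1 for weights that survive and 0 for weights already pruned.
    Only surviving weights are ranked, so repeated rounds prune iteratively.
    """
    alive = [i for i, m in enumerate(mask) if m == 1]
    n_prune = int(len(alive) * prune_fraction)
    # Rank surviving weights by magnitude after training, smallest first.
    alive.sort(key=lambda i: abs(trained[i]))
    new_mask = list(mask)
    for i in alive[:n_prune]:
        new_mask[i] = 0
    return new_mask


def rewind(initial: List[float], mask: List[int]) -> List[float]:
    """Reset surviving weights to their ORIGINAL initial values; zero the rest."""
    return [w0 if m else 0.0 for w0, m in zip(initial, mask)]


if __name__ == "__main__":
    initial = [0.5, -0.2, 0.1, 0.8, -0.3, 0.05]   # weights at initialization
    trained = [0.9, -0.01, 0.4, -1.2, 0.02, 0.3]  # same weights after training (assumed)
    mask = prune_round(trained, [1] * len(trained), prune_fraction=0.5)
    print(mask)                    # [1, 0, 1, 1, 0, 0]
    print(rewind(initial, mask))   # [0.5, 0.0, 0.1, 0.8, 0.0, 0.0]
```

In the full procedure, the rewound subnetwork would be retrained and the prune-and-rewind round repeated. The key design choice is the rewind: the surviving weights restart from their initial values rather than keeping their trained values.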